Sometimes we need to check every link on a site and verify that they all work. This question has come up a couple of times on the JMeter forum:

'How do I use JMeter to spider my site?'

But before we go into the solutions, let's take a step back and look at the reasons behind wanting to spider the site (or skip to the solution).

a. You want to find out whether any URLs respond with a 404. This isn't really a task for JMeter. There are various open source/free link checkers that one might use, so there really isn't a need to run JMeter to solve this class of problems (see http://java-source.net/open-source/crawlers for spiders written in Java; there are others too, like Xenu or LinkChecker).

b. You want to generate some sort of background load and you hit upon this technique. A spider run with a specific number of threads will provide the load. While this is a valid scenario, it doesn't really simulate what the users are doing on the site. So it goes back to the question: what are you trying to simulate? It's much better to simulate actual journeys with representative loads. You might need to study your logs and your web server monitoring tools to figure this out. It's tougher to do, but it's more useful.

c. You want to simulate the behavior of an actual spider (like Google) and see how your site responds and whether all the pages are reachable. See a.

Other problems

A test without assertions is pretty much useless. A spidering test is by its nature difficult to assert on (other than checking that the response code is 200 and perhaps that the page does not contain the standard error message).

JMeter does not really provide good out-of-the-box support for spidering. The documentation refers to an HTML Link Parser which can be used for spiders, which leads some users to try it out and complain that it doesn't work. It does work (see this post), but not how you would expect, and not as a spider (the reference manual needs to change).

Before we go on to implementing an actual spider in JMeter, let's look at some alternatives that we have (using JMeter and not a third-party tool).

a. Most sites have a fixed set of URLs and possibly a dynamic set, e.g. a product catalog where each product maps to a row in the database. It is easy enough to write a query that fetches these (using a JDBC Sampler) and generates a CSV file containing the URLs you want. The JDBC Sampler is followed by a Thread Group (with as many threads as the spider should run) which reads each URL from the CSV. This is especially useful when you consider that some links may not be reachable from any other link on the site. This is bad site design, but it exists: for example, the FAQs on my current site are not browsable and must be searched for, which means that no URL links to them and a spider would never find them directly.

b. Some sites generate a sitemap (it may even be the sitemap that is submitted to Google) for the reasons mentioned above. It is trivial to parse this to obtain all the URLs. A stylesheet can convert it into a CSV, and the rest is the same as point a.
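As a rough illustration of point b, here is a minimal sketch that pulls the URLs out of a sitemap with the JDK's DOM parser rather than a stylesheet. The class name `SitemapToCsv` and the example.com URLs are made up for the example, and it assumes a standard sitemap where each URL sits in a `<loc>` element.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.NodeList;

// Hypothetical helper, not part of JMeter or of the code in this post.
class SitemapToCsv {
    /** Returns the trimmed text of every <loc> element, in document order. */
    static List<String> extractUrls(String sitemapXml) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true); // sitemaps declare a default namespace
        Document doc = factory.newDocumentBuilder()
                .parse(new ByteArrayInputStream(sitemapXml.getBytes(StandardCharsets.UTF_8)));
        // "*" matches <loc> in any namespace
        NodeList locs = doc.getElementsByTagNameNS("*", "loc");
        List<String> urls = new ArrayList<>();
        for (int i = 0; i < locs.getLength(); i++) {
            urls.add(locs.item(i).getTextContent().trim());
        }
        return urls;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<urlset xmlns=\"http://www.sitemaps.org/schemas/sitemap/0.9\">"
                + "<url><loc>http://example.com/</loc></url>"
                + "<url><loc>http://example.com/faq</loc></url>"
                + "</urlset>";
        // One URL per line is already a single-column CSV
        extractUrls(xml).forEach(System.out::println);
    }
}
```

Writing the returned list to a file, one URL per line, gives a CSV that a CSV Data Set Config can read directly.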

One last thing before we start discussing JMeter solutions. The first time I learned anything about how spiders work was when I ran Nutch locally (I later refined this with a knowledge of MapReduce).

In a simplified form:

a. A first stage reads pending URLs from the buffer/queue and downloads the content. This is multithreaded, but not so heavily that it brings down the site.

b. The second stage parses the contents for links and feeds them back into the same buffer/queue.

c. A third stage indexes the content for search. This is irrelevant for our tests.

A related concept is that of depth, i.e. the minimum number of clicks it takes to reach a link from the root/home/starting point of the website.
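To make the stages and the notion of depth concrete, here is a small sketch of a level-by-level crawl. It is not how Nutch is implemented. To stay self-contained it replaces real downloading and parsing with an in-memory map from each URL to the links found on it, and the names `LevelCrawlSketch`, `crawlByLevel` and `maxDepth` are invented for the example.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Illustrative only: "links" stands in for fetching a page and parsing its anchors.
class LevelCrawlSketch {
    /** Returns the URLs first reached at each depth; index 0 holds the root. */
    static List<Set<String>> crawlByLevel(String root, Map<String, List<String>> links, int maxDepth) {
        List<Set<String>> levels = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Set<String> current = new LinkedHashSet<>();
        current.add(root);
        seen.add(root);
        // Stop when no new URLs turn up or when the depth limit is reached
        while (!current.isEmpty() && levels.size() <= maxDepth) {
            levels.add(current);
            Set<String> next = new LinkedHashSet<>();
            for (String url : current) {                       // stage a: "download" each pending URL
                for (String link : links.getOrDefault(url, Collections.emptyList())) {
                    if (seen.add(link)) {                      // stage b: queue links not seen before
                        next.add(link);
                    }
                }
            }
            current = next;
        }
        return levels;
    }
}
```

Because a URL is recorded the first time it is seen, the level it lands in is its depth as defined above.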

Attempt 1

Using the previous definition of depth, most sites (because of menus and sitemaps) need at most 5-7 clicks to reach any page from the root page (kind of like Kevin Bacon's six degrees of separation). This implies that instead of a generic solution we could have a hardcoded solution which fixes the depth that we look at and uses the time-tested method of copy and paste.

Here's what this solution would look like.

The test plan is configured to run the Thread Groups serially

1. Thread Group L0 fetches all the URLs listed in a file named L_0.csv. Each request is attached to a BeanShell listener which parses the response to extract all anchors and writes them to a separate temp file. The code which does this is lifted from AnchorModifier and is accessed via a BeanShell script calling a Java class (JMeterSpiderUtil).

2. Thread Group L0 Consolidate (single thread) creates a unique set of all the URLs from the temporary files created in step 1, subtracts the URLs already fetched from L_0.csv, and writes the remaining URLs to a file named L_1.csv. This code is also in the Java class and is described below.

3. Thread Group L1 (multi thread) fetches all the URLs listed in the file L_1.csv which was created in step 2. Each request is attached to a BeanShell listener which parses the response to extract all anchors and writes them to a separate temp file.

4. Thread Group L1 Consolidate (single thread) creates a unique set of all the URLs from the temporary files created in step 3, subtracts the URLs already fetched from L_0.csv and L_1.csv, and writes the remaining URLs to a file named L_2.csv.

... and so on for any number of levels/depths that you want.
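The consolidation steps boil down to a set union followed by a set difference. The sketch below shows the idea with plain `java.nio` file handling. It is not the actual JMeterSpiderUtil code, and the class and method names are assumptions made for the example.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical stand-in for the consolidation logic; one URL per line in every file.
class ConsolidateSketch {
    /** Unions the URLs in tempFiles, removes those in fetchedFiles, writes the rest to nextLevel. */
    static Set<String> consolidate(List<Path> tempFiles, List<Path> fetchedFiles, Path nextLevel)
            throws IOException {
        Set<String> pending = new TreeSet<>();        // a set removes duplicates across temp files
        for (Path p : tempFiles) {
            pending.addAll(readUrls(p));
        }
        for (Path p : fetchedFiles) {
            pending.removeAll(readUrls(p));           // drop URLs already fetched at earlier levels
        }
        Files.write(nextLevel, pending);              // e.g. L_1.csv, one URL per line
        return pending;
    }

    /** Reads non-blank, trimmed lines from a file. */
    static List<String> readUrls(Path p) throws IOException {
        List<String> urls = new ArrayList<>();
        for (String line : Files.readAllLines(p)) {
            String trimmed = line.trim();
            if (!trimmed.isEmpty()) {
                urls.add(trimmed);
            }
        }
        return urls;
    }
}
```

For level 1 the fetched list is just L_0.csv; for level 2 it is L_0.csv and L_1.csv, and so on.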

If you are any sort of developer, you are probably groaning at the above. "Hasn't this guy heard about loops? What about maintaining these tests? Are we going to make every change in 5 places?"

We could use Module Controllers to reuse most of the test structure, but it's still inelegant.

One of the reasons I've described the above is that even if the solution looks inelegant, it is easy to understand and doesn't take long to implement, which means you can start testing your site pretty quickly. Note that your priority is testing the site, not the elegance of the testing script.

Attempt 2

Let's now see if we can increase the elegance of the script. One of the problems we run into is that the CSV Data Set Config can't use variable names for the filename. Another problem is that in the solution above we run the Thread Groups serially and use a single thread in a Thread Group to combine the results. If we want to use a single looped Thread Group, we have to ensure that only one thread does the combining, and that it waits for all the other threads to complete. You can probably simplify this solution by extending the CSV Data Set Config or the looping controllers. I don't consider these approaches because I have no Swing experience at all, so the only ways I extend JMeter are via BeanShell or Java.

After some experimentation, this is the solution that I've come up with:

1. The Loop Controller controls the depth/level.

2. The Simple Controller has an If Controller that is only true for the thread with threadnumber 1. It defines the current level and copies the file L_${currentlevel}.csv to urls.csv.

3. The "wait for everyone" element is configured with a Synchronizing Timer (set to the total number of threads in the Thread Group) so that all the threads wait until the first thread has finished step 2.

4. The While Controller iterates over all the URLs in the CSV. The CSV Data Set Config is configured to read the copied urls.csv file (since we cannot make the name variable). What we do in the subsequent steps is recreate this same file with new data. Each request is attached to a BeanShell listener which parses the response to extract all anchors and writes them to a separate temp file. The code which does this is lifted from AnchorModifier and is accessed via a BeanShell script calling a Java class (JMeterSpiderUtil).

5. We have a copy of step 3 here: all the threads wait until everyone else is done (for that level only).

6. The If Controller ensures that the consolidation is done only by the first thread. All the files written in step 4 are combined into a unique set, all the URLs already processed are subtracted, and a new file L_${nextlevel}.csv is written. Properties are set so that ${currentlevel} now holds the value of ${nextlevel}, so that step 2 will pick up this new file and copy it to urls.csv for the CSV Data Set Config to read.

7. The Reset Property BeanShell sampler is used to reset the CSV Data Set Config:

import org.apache.jmeter.services.FileServer;

FileServer server = FileServer.getFileServer(); // get the File Server

server.closeFiles(); // close everything

server.reserveFile("../spider/urls/urls.csv", null, "../spider/urls/urls.csv"); //reregister the CSV, we have chosen sharing mode as All Threads to avoid copying the alias name generation in CSVDataSet.java

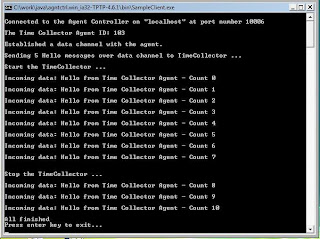

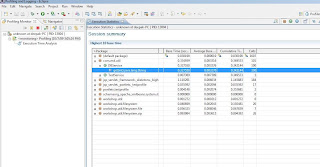

This was run with a root of http://jakarta.apache.org/jmeter/index.html.

Only URLs on the jakarta.apache.org host with jmeter in them were spidered.

Level 1 - 17 urls

Level 2 - 29 urls

Level 3 - 125 urls

Level 4 - 833 urls

Level 5 - 2 urls

Level 6 - 0 urls

And I did get some failures too e.g.

http://jakarta.apache.org/jmeter/$next

http://jakarta.apache.org/jmeter/$prev

So I guess the test is successful because it found some issues!

Level 6 has 0 URLs, which means there are no more URLs that satisfy our criteria. You could change the loop to a While Controller and use this condition to decide whether the test should exit. However, some sites generate unique URLs (e.g. by appending a timestamp), which means your test might never exit, so you should normally have a safety limit on the maximum depth.

Is attempt 2 more elegant? Probably, but it is also less configurable, took about 2-3 days to get working, and needed some study of the JMeter source code. Note that the previous solution could vary the number of threads available to each Thread Group, but this one can't. However, by using the Constant Throughput Timer you can achieve variable throughput for different levels.

JMeterSpiderUtil.java

The major part of this code is from AnchorModifier. The important snippets are shown below.

if(isExcluded(fetchedUrl) ) //excludes stuff like PDF/.jmx files which can't be parsed

...

(Document) HtmlParsingUtils.getDOM(responseText.substring(index)); // gets a DOM from the response text

...

NodeList nodeList = html.getElementsByTagName("a"); //gets the links

...

HTTPSamplerBase newUrl = HtmlParsingUtils.createUrlFromAnchor( hrefStr, ConversionUtils.makeRelativeURL(result .getURL(), base)); //get the url

...

if(allowedHost.equalsIgnoreCase(newUrl.getDomain())) {

String currUrl = newUrl.getUrl().toString();

if(matchesPath(currUrl)) {

//currUrl = stripSessionId(currUrl);

//currUrl = stripTStamp(currUrl);

fw.write(currUrl + "\n");

}

}

//checks whether the host is the one we are interested in, whether the path is one that we want to spider, could strip out session ids or timestamp parameters in the url

...
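The filtering described in the last comment can be sketched on its own, outside JMeter. This is not the code from JMeterSpiderUtil: the class `UrlFilterSketch`, the `accept` method, the use of `java.net.URI`, and the `;jsessionid=` format handled by this version of `stripSessionId` are all assumptions for illustration. It takes a set of allowed hosts rather than a single value.

```java
import java.net.URI;
import java.util.Collection;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;
import java.util.regex.Pattern;

// Illustrative filter; the real utility hardcodes its host and path pattern.
class UrlFilterSketch {
    private final Set<String> allowedHosts = new HashSet<>();
    private final Pattern pathPattern;

    UrlFilterSketch(Collection<String> hosts, String pathRegex) {
        for (String h : hosts) {
            allowedHosts.add(h.toLowerCase(Locale.ROOT)); // host comparison is case-insensitive
        }
        this.pathPattern = Pattern.compile(pathRegex);
    }

    /** True when the URL is on an allowed host and its path matches the pattern. */
    boolean accept(String url) {
        try {
            URI uri = URI.create(url);
            String host = uri.getHost();
            String path = uri.getPath();
            return host != null && path != null
                    && allowedHosts.contains(host.toLowerCase(Locale.ROOT))
                    && pathPattern.matcher(path).find();
        } catch (IllegalArgumentException e) {
            return false; // not a syntactically valid URI, so skip it
        }
    }

    /** Removes a servlet-style ";jsessionid=..." segment so equal pages compare equal. */
    static String stripSessionId(String url) {
        return url.replaceAll("(?i);jsessionid=[^?#]*", "");
    }
}
```

A filter built with the host jakarta.apache.org and the path pattern /jmeter/ would mirror the restriction used in the run described above.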

Download Code

SpiderTest - Attempt 1.

SpiderTest2 - Attempt 2.

JMeterSpiderUtil - Java utility.

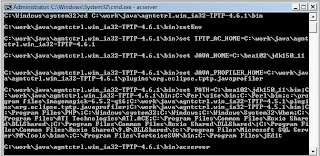

If you want to use the code

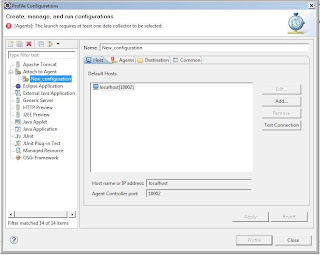

a. Ensure that the total number of threads is specified correctly in both Synchronizing Timers (use a property).

b. Some directories are hardcoded. I used a directory named scripts under the JMeter home, and another directory called spider at the same level as scripts. Scripts has two subdirectories, temp and urls. L_0.csv, the starting point, is copied into urls.

c. If you want to rerun the test, ensure you delete all directories under temp and all previously generated CSV files in urls (except for L_0.csv).

d. You might have to change the Java code to further filter URLs or otherwise improve it. The JMeter path regular expression is hardcoded.

e. You have to change the allowedHost, probably to a list of allowed hosts rather than a single value.

f. You probably have to honor robots.txt.

g. You might want to enable the option to fetch embedded resources, or change which URLs are considered for fetching (currently only anchors, not forms or AJAX URLs based on a pattern).
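For point f, a very reduced robots.txt check might look like the sketch below. It only reads `Disallow` lines in groups for `User-agent: *` and treats them as plain path prefixes, ignoring `Allow`, wildcards and groups that list several user agents, so treat it as a starting point rather than a compliant parser. The class and method names are made up for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Simplified on purpose: prefix matching on Disallow rules for "User-agent: *" only.
class RobotsSketch {
    /** Collects the Disallow path prefixes that apply to all user agents. */
    static List<String> disallowedPrefixes(String robotsTxt) {
        List<String> prefixes = new ArrayList<>();
        boolean applies = false;
        for (String raw : robotsTxt.split("\\r?\\n")) {
            String line = raw.replaceFirst("#.*", "").trim(); // strip comments
            int colon = line.indexOf(':');
            if (colon < 0) {
                continue;
            }
            String field = line.substring(0, colon).trim().toLowerCase(Locale.ROOT);
            String value = line.substring(colon + 1).trim();
            if (field.equals("user-agent")) {
                applies = value.equals("*");
            } else if (applies && field.equals("disallow") && !value.isEmpty()) {
                prefixes.add(value); // an empty Disallow means "nothing is disallowed"
            }
        }
        return prefixes;
    }

    /** True when the path does not start with any disallowed prefix. */
    static boolean isAllowed(String path, List<String> prefixes) {
        for (String prefix : prefixes) {
            if (path.startsWith(prefix)) {
                return false;
            }
        }
        return true;
    }
}
```

A check like this would sit next to the host and path filtering, before a URL is written to the temp file.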

Note that the code is extremely inefficient and was only written to check whether what I theorized in http://www.mail-archive.com/jmeter-user@jakarta.apache.org/msg27108.html was possible.

There is a lot of work left to properly parameterise this test, but hopefully this can get you started.

Code available here