Edit: My latest experiments with JMeter and graphs are at http://theworkaholic.blogspot.com/2015/05/graphs-for-jmeter-using-elasticsearch.html


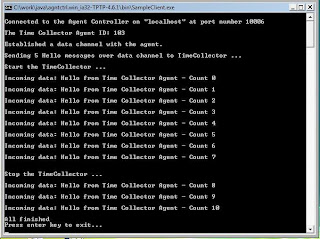

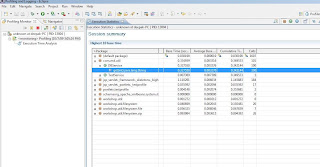


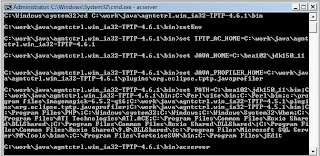

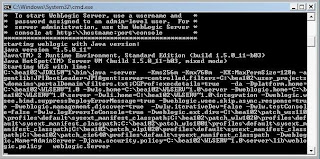

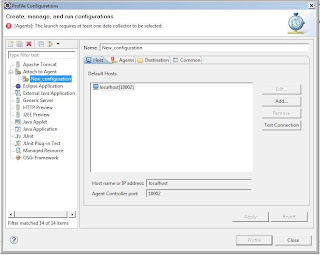

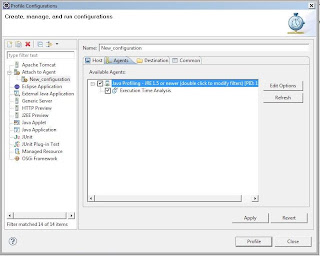

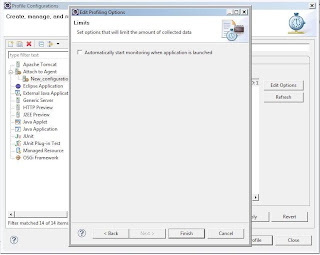


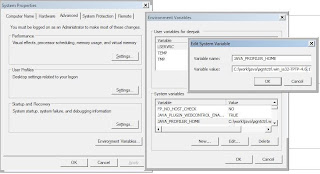

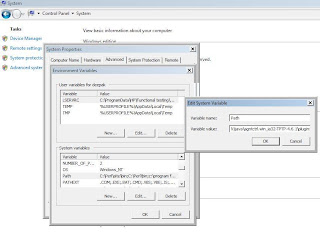


One of the few features JMeter lacks is graphing for command-line runs: when you run tests from the command line, the out-of-the-box reports are restricted to a stylesheet that generates a summary report.

There are workarounds: you could load the results into Excel (for small files), or you could parse the log file and use JFreeChart to generate the graphs, which is what I did. See the examples.

The following is an explanation of the mechanisms I used. They probably won't work out of the box, but hopefully they will be useful to someone who can customise them. The samples are meant to be used by developers, so if you are a tester with little or no coding experience, get a developer from your team to help.

I haven't looked closely at the JMeter parsing details, but you don't need to know the details to use the JMeter classes (which in my opinion is a hallmark of a well-designed system). There are two important files, saveservice.properties and jmeter.properties, which I have copied to a location outside the JMeter home so that I could modify them if I needed to.

The basic code for parsing with the JMeter classes (the JMeter API) is:

    SaveService.loadTestResults(FileInputStream, ResultCollectorHelper);

Each sample that is read is handed to a visualizer, which implements two methods:

    add(SampleResult sampleResult)
    public Object writeOutput() throws IOException

Visualizer is a simple strategy pattern. I have some sample implementations, LineChartVisualizer, StackedBarChartVisualizer and MinMaxAvgGraphVisualizer, which respectively draw a line chart of each response, a stacked chart (latency plus the rest of the response time) and a line chart showing the min, max and average alongside the response time.
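To make the strategy idea concrete without pulling in JMeter or JFreeChart, here is a minimal sketch using stand-in types. `Sample`, `SampleVisualizer`, `CountingVisualizer`, `AverageVisualizer` and `SampleFeeder` are all hypothetical names invented for this illustration; they are not classes from the download or from the JMeter API.

```java
import java.util.List;

/** Hypothetical stand-in for JMeter's SampleResult: just a label and an elapsed time. */
class Sample {
    final String label;
    final long elapsedMillis;

    Sample(String label, long elapsedMillis) {
        this.label = label;
        this.elapsedMillis = elapsedMillis;
    }
}

/** The strategy: the code that reads samples only knows about these two methods. */
interface SampleVisualizer {
    void add(Sample sample);

    Object writeOutput();
}

/** One strategy: counts the samples instead of drawing a chart. */
class CountingVisualizer implements SampleVisualizer {
    private int count;

    public void add(Sample sample) {
        count++;
    }

    public Object writeOutput() {
        return count;
    }
}

/** Another strategy: reports the average elapsed time in milliseconds. */
class AverageVisualizer implements SampleVisualizer {
    private long total;
    private int count;

    public void add(Sample sample) {
        total += sample.elapsedMillis;
        count++;
    }

    public Object writeOutput() {
        return count == 0 ? 0.0 : (double) total / count;
    }
}

/** Stand-in for the parser: feeds every sample to whichever strategy it was given. */
class SampleFeeder {
    static Object run(List<Sample> samples, SampleVisualizer visualizer) {
        for (Sample sample : samples) {
            visualizer.add(sample);
        }
        return visualizer.writeOutput();
    }
}
```

Swapping `new CountingVisualizer()` for `new AverageVisualizer()` in a call to `SampleFeeder.run` changes what is produced without touching the code that reads the samples, which is the property the real visualizers rely on.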

If we take a quick look at the LineChartVisualizer code, it's pretty straightforward: it simply uses the JFreeChart API and populates the data from each SampleResult. Note that the line chart objects use memory proportional to the number of samples.

    // Adds a sample. JFreeChart uses a TimeSeries object into which we set each data item.
    public void add(SampleResult sampleResult) {
        String label = sampleResult.getSampleLabel();
        TimeSeries s1 = map.get(label);
        if (s1 == null) {
            s1 = new TimeSeries(label);
            map.put(label, s1);
        }
        long responseTime = sampleResult.getTime();
        Date d = new Date(sampleResult.getStartTime());
        s1.addOrUpdate(new Millisecond(d), responseTime);
    }

    // Uses the JFreeChart API to write the data into an image file.
    public Object writeOutput() throws IOException {
        TimeSeriesCollection dataset = new TimeSeriesCollection();
        for (Map.Entry<String, TimeSeries> entry : map.entrySet()) {
            dataset.addSeries(entry.getValue());
        }
        JFreeChart chart = createChart(dataset);
        FileOutputStream fos = null;
        try {
            fos = new FileOutputStream(fileName);
            ChartUtilities.writeChartAsPNG(fos, chart, WIDTH, HEIGHT);
        } finally {
            if (fos != null) {
                fos.close();
            }
        }
        return null;
    }

    // Uses the JFreeChart API to generate a line chart.
    private static JFreeChart createChart(XYDataset dataset) {
        JFreeChart chart = ChartFactory.createTimeSeriesChart(
                "Response Chart", // title
                "Date",           // x-axis label
                "Time(ms)",       // y-axis label
                dataset,          // data
                true,             // create legend?
                true,             // generate tooltips?
                false             // generate URLs?
        );
        chart.setBackgroundPaint(Color.white);
        XYPlot plot = (XYPlot) chart.getPlot();
        plot.setBackgroundPaint(Color.lightGray);
        plot.setDomainGridlinePaint(Color.white);
        plot.setRangeGridlinePaint(Color.white);
        plot.setAxisOffset(new RectangleInsets(5.0, 5.0, 5.0, 5.0));
        plot.setDomainCrosshairVisible(true);
        plot.setRangeCrosshairVisible(true);
        XYItemRenderer r = plot.getRenderer();
        if (r instanceof XYLineAndShapeRenderer) {
            XYLineAndShapeRenderer renderer = (XYLineAndShapeRenderer) r;
            renderer.setBaseShapesVisible(true);
            renderer.setBaseShapesFilled(true);
            renderer.setDrawSeriesLineAsPath(true);
        }
        DateAxis axis = (DateAxis) plot.getDomainAxis();
        axis.setDateFormatOverride(new SimpleDateFormat("dd-MMM-yyyy HH:mm"));
        return chart;
    }

We can change the data that a graph shows by using the Decorator pattern. One decorator, LabelFilterVisualizer, is shown.

    /**
     * decorates the visualizer by filtering out labels
     */
    public void add(SampleResult sampleResult) {
        boolean allow = labels.contains(sampleResult.getSampleLabel());
        if (!pass) {
            allow = !allow;
        }
        if (allow) {
            visualizer.add(sampleResult);
        }
    }

    /**
     * delegates to the decorated visualizer
     *
     * @return whatever the decorated visualizer returns
     */
    public Object writeOutput() throws IOException {
        return visualizer.writeOutput();
    }

This class filters the samples by label and only passes on those that satisfy the criteria. The writing of the image is delegated to the decorated OfflineVisualizer.
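The effect of the `pass` flag is easier to see in isolation. The following is a minimal sketch with stand-in types (`LabelSink`, `RecordingSink` and `LabelFilter` are hypothetical names made up for this example, and the sink receives only a label rather than a full SampleResult). My reading of the code above is that `pass == true` keeps only the listed labels and `pass == false` keeps everything else; treat that as an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

/** Hypothetical stand-in for a visualizer that only receives labels. */
interface LabelSink {
    void add(String label);
}

/** Records every label it receives, so the filtering is easy to observe. */
class RecordingSink implements LabelSink {
    final List<String> seen = new ArrayList<String>();

    public void add(String label) {
        seen.add(label);
    }
}

/** Decorator: forwards a label only if it passes the include/exclude rule. */
class LabelFilter implements LabelSink {
    private final Set<String> labels;
    private final boolean pass; // true = keep listed labels, false = keep all others
    private final LabelSink delegate;

    LabelFilter(Set<String> labels, boolean pass, LabelSink delegate) {
        this.labels = labels;
        this.pass = pass;
        this.delegate = delegate;
    }

    public void add(String label) {
        boolean allow = labels.contains(label);
        if (!pass) {
            allow = !allow; // invert the rule when excluding
        }
        if (allow) {
            delegate.add(label);
        }
    }
}
```

Because the filter implements the same interface as the thing it wraps, it can be stacked in front of any sink, which is what makes it a decorator rather than a special case inside each chart class.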

We can also use the Composite pattern (CompositeVisualizer) to generate multiple graphs with a single pass through the result log file.

    /**
     * adds the sample to each of the composed visualizers
     */
    public void add(SampleResult sampleResult) {
        for (OfflineVisualizer visualizer : visualizers) {
            visualizer.add(sampleResult);
        }
    }

    /**
     * @return a List of each result from the composed visualizer
     */
    public Object writeOutput() throws IOException {
        List<Object> result = new ArrayList<Object>();
        for (OfflineVisualizer visualizer : visualizers) {
            result.add(visualizer.writeOutput());
        }
        return result;
    }

Finally, we can use all of the above to process multiple files, e.g. when we want to show trends across multiple runs with varying thread counts.

    /**
     * parses each file
     *
     * @throws Exception
     */
    public void parse() throws Exception {
        // One day we might multithread this
        for (String file : files) {
            ResultCollector rc = new ResultCollector();
            TotalThroughputVisualizer ttv = new TotalThroughputVisualizer();
            visualizers.add(ttv);
            ResultCollectorHelper rch = new ResultCollectorHelper(rc, ttv);
            XStreamJTLParser p = new XStreamJTLParser(new File(file), rch);
            p.parse();
        }
    }

    /**
     * Gets the resulting throughput from each file and combines them
     *
     * @return always returns null
     * @throws IOException
     */
    public Object writeOutput() throws IOException {
        XYSeries xyseries = new XYSeries("throughput");
        for (AbstractOfflineVisualizer visualizer : visualizers) {
            Throughput throughput = (Throughput) visualizer.writeOutput();
            xyseries.add(throughput.getThreadCount(), throughput.getThroughput());
        }
        XYSeriesCollection dataset = new XYSeriesCollection();
        dataset.addSeries(xyseries);
        JFreeChart chart = createChart(dataset);
        FileOutputStream fos = null;
        try {
            fos = new FileOutputStream(fileName);
            ChartUtilities.writeChartAsPNG(fos, chart, WIDTH, HEIGHT);
        } finally {
            if (fos != null) {
                fos.close();
            }
        }
        return null;
    }
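TotalThroughputVisualizer itself is not listed here. As a rough sketch of the kind of calculation it performs (total number of requests divided by the total time the test ran), the class below accumulates what is needed for one result file. `ThroughputAccumulator` is a hypothetical name, and measuring the run from the earliest sample start to the latest sample end is my assumption for this example, not necessarily how the downloadable code does it.

```java
/** Accumulates what is needed to compute throughput for one result file. */
class ThroughputAccumulator {
    private long count;
    private long firstStart = Long.MAX_VALUE;
    private long lastEnd = Long.MIN_VALUE;

    /**
     * @param startMillis   timestamp at which the sample started
     * @param elapsedMillis response time of the sample
     */
    void add(long startMillis, long elapsedMillis) {
        count++;
        firstStart = Math.min(firstStart, startMillis);
        lastEnd = Math.max(lastEnd, startMillis + elapsedMillis);
    }

    /** Requests per second over the whole run; 0 if nothing usable was recorded. */
    double requestsPerSecond() {
        if (count == 0 || lastEnd <= firstStart) {
            return 0.0;
        }
        return count * 1000.0 / (lastEnd - firstStart);
    }
}
```

Only three numbers are kept per file, so unlike the line chart this uses constant memory regardless of how many samples the file contains.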

Here's a sample that I ran. A single thread hits 3 pages on the Apache website in a loop.

Response times are plotted against each label (without considering the thread).

    File f = new File(JMETER_RESULT_FILE);
    ResultCollector rc = new ResultCollector();
    LineChartVisualizer v = new LineChartVisualizer(OUTPUT_GRAPH_DIR + "/LineChart.png");
    ResultCollectorHelper rch = new ResultCollectorHelper(rc, v); // this is the visualizer we want
    XStreamJTLParser p = new XStreamJTLParser(f, rch);
    p.parse();
    v.writeOutput(); // write the output

The next example keeps only the Component reference request and plots the response time together with the minimum, maximum and average time for this request. You could extend this to show the median or the 90th percentile.
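As a starting point for that extension, here is a minimal sketch of a percentile calculation over collected response times. `ResponseTimeStats` is a hypothetical helper, not part of the download, and I have assumed the nearest-rank definition of a percentile; other definitions interpolate between values and will give slightly different numbers.

```java
import java.util.Arrays;

/** Hypothetical helper: percentiles over a batch of response times in milliseconds. */
class ResponseTimeStats {

    /**
     * Nearest-rank percentile: the smallest value such that at least
     * the given percentage of the samples are less than or equal to it.
     *
     * @param times   response times, in any order
     * @param percent a whole number from 1 to 100
     */
    static long percentile(long[] times, int percent) {
        if (times.length == 0) {
            throw new IllegalArgumentException("no samples");
        }
        if (percent < 1 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 1 and 100");
        }
        long[] sorted = times.clone(); // leave the caller's array untouched
        Arrays.sort(sorted);
        // Integer ceiling of percent * n / 100 gives the 1-based rank.
        int rank = (int) (((long) percent * sorted.length + 99) / 100);
        return sorted[rank - 1];
    }

    /** The median is the 50th percentile under the same definition. */
    static long median(long[] times) {
        return percentile(times, 50);
    }
}
```

Note that this needs all the response times for a label in memory at once, so for very large result files you would want to think about whether that is acceptable.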

The code for the min/max/average graph is:

    File f = new File(JMETER_RESULT_FILE);
    ResultCollector rc = new ResultCollector();
    MinMaxAvgGraphVisualizer v = new MinMaxAvgGraphVisualizer(OUTPUT_GRAPH_DIR + "/MinMaxAvg.png");
    String[] labels = {"Component reference"}; // we only want this label
    LabelFilterVisualizer lv = new LabelFilterVisualizer(Arrays.asList(labels), v); // decorate the MinMaxAvgGraphVisualizer
    ResultCollectorHelper rch = new ResultCollectorHelper(rc, lv); // use the decorated visualizer
    XStreamJTLParser p = new XStreamJTLParser(f, rch);
    p.parse();
    lv.writeOutput(); // write it out

The next chart is a stacked chart which splits the response time for the Component reference request into latency and the rest of the time.

    File f = new File(JMETER_RESULT_FILE);
    ResultCollector rc = new ResultCollector();
    StackedBarChartVisualizer v = new StackedBarChartVisualizer(OUTPUT_GRAPH_DIR + "/StackedBarChart.png");
    String[] labels = {"Component reference"}; // we only want this label
    LabelFilterVisualizer lv = new LabelFilterVisualizer(Arrays.asList(labels), v); // decorate the StackedBarChartVisualizer
    ResultCollectorHelper rch = new ResultCollectorHelper(rc, lv);
    XStreamJTLParser p = new XStreamJTLParser(f, rch);
    p.parse();
    lv.writeOutput(); // write the output

We could also generate all of these graphs at the same time using the CompositeVisualizer:

    File f = new File(JMETER_RESULT_FILE);
    ResultCollector rc = new ResultCollector();
    LineChartVisualizer lcv = new LineChartVisualizer(OUTPUT_GRAPH_DIR + "/AllLineChart.png");
    StackedBarChartVisualizer sbv = new StackedBarChartVisualizer(OUTPUT_GRAPH_DIR + "/AllStackedBarChart.png");
    MinMaxAvgGraphVisualizer mmav = new MinMaxAvgGraphVisualizer(OUTPUT_GRAPH_DIR + "/AllMinMaxAvg.png");
    String[] labels = {"Component reference"};
    LabelFilterVisualizer lv = new LabelFilterVisualizer(Arrays.asList(labels), sbv); // decorate
    LabelFilterVisualizer lv2 = new LabelFilterVisualizer(Arrays.asList(labels), mmav); // decorate
    OfflineVisualizer[] vs = {lcv, lv, lv2}; // use these 3 visualizers
    CompositeVisualizer cv = new CompositeVisualizer(Arrays.asList(vs)); // create a composite
    ResultCollectorHelper rch = new ResultCollectorHelper(rc, cv);
    XStreamJTLParser p = new XStreamJTLParser(f, rch);
    p.parse();
    cv.writeOutput(); // the composite will delegate to each visualizer
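If you want to convince yourself that the composite really does process each sample once and fan it out, here is a minimal sketch with stand-in types that runs without JMeter or JFreeChart. `TimeVisualizer`, `MinVisualizer`, `MaxVisualizer` and `CompositeTimeVisualizer` are hypothetical names for this illustration, and the visualizers receive a bare response time instead of a SampleResult.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for OfflineVisualizer that receives only a response time. */
interface TimeVisualizer {
    void add(long elapsedMillis);

    Object writeOutput();
}

/** Tracks the smallest response time seen. */
class MinVisualizer implements TimeVisualizer {
    private long min = Long.MAX_VALUE;

    public void add(long elapsedMillis) {
        min = Math.min(min, elapsedMillis);
    }

    public Object writeOutput() {
        return min;
    }
}

/** Tracks the largest response time seen. */
class MaxVisualizer implements TimeVisualizer {
    private long max = Long.MIN_VALUE;

    public void add(long elapsedMillis) {
        max = Math.max(max, elapsedMillis);
    }

    public Object writeOutput() {
        return max;
    }
}

/** Composite: looks like a single visualizer but forwards to all of its children. */
class CompositeTimeVisualizer implements TimeVisualizer {
    private final List<TimeVisualizer> children;

    CompositeTimeVisualizer(List<TimeVisualizer> children) {
        this.children = children;
    }

    public void add(long elapsedMillis) {
        for (TimeVisualizer child : children) {
            child.add(elapsedMillis);
        }
    }

    /** Returns one result per child, in the order the children were given. */
    public Object writeOutput() {
        List<Object> results = new ArrayList<Object>();
        for (TimeVisualizer child : children) {
            results.add(child.writeOutput());
        }
        return results;
    }
}
```

The caller loops over the samples exactly once and calls `add` on the composite; each child sees every sample, so the cost of reading a large result file is paid once no matter how many graphs are produced.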

I also reran the same test with 1, 3, 5, 7 and 10 threads. Using the classes above and a new throughput visualizer (where throughput is calculated as the total number of requests divided by the total time the test ran), I plotted the throughput against the number of threads.

    String[] files = {
        JMETER_RESULT_DIR + "/OfflineGraphs-dev-200912311310.jtl",
        JMETER_RESULT_DIR + "/OfflineGraphs-dev-200912311312.jtl",
        JMETER_RESULT_DIR + "/OfflineGraphs-dev-200912311315.jtl",
        JMETER_RESULT_DIR + "/OfflineGraphs-dev-200912311316.jtl",
        JMETER_RESULT_DIR + "/OfflineGraphs-dev-200912311318.jtl"
    };
    MultiFileThroughput mft = new MultiFileThroughput(Arrays.asList(files), OUTPUT_GRAPH_DIR + "/Throughput.png");
    mft.parse();
    mft.writeOutput();

The above examples are not exhaustive and probably won't work for you as they are (e.g. threads and thread groups are ignored, and these might have meaning for your test). However, you should be able to use them to write your own implementation.

Running the code

a. Download the code. This is an Eclipse workspace. To get it to compile, you need to define two classpath variables in Eclipse (JMETER_HOME and JFREECHART_HOME). Modify config.properties to whatever is applicable for your system. Use GraphClient to see the samples.

I created an additional dummy directory to act as the JMeter home, created a bin directory under it, and copied jmeter.properties and saveservice.properties into it.

b. Change the client to use the visualizers you want. The sample client should give you some idea. Or create a new visualizer.

c. Compile and run! If you use a different IDE or want to use Ant, it should be pretty straightforward. The source code was written and tested on Java 1.5. The only 1.5 features I use are generics and the new for loop syntax, so you could change it to be 1.4 compatible.

Further work

a. Combining results from multiple files into a single run.

b. Making the visualizers configurable

c. Canned HTML reports

d. Threads/ThreadGroups

e. Determine limits for the graphs.

f. Support custom attributes

If there are specific graph requests, I might look into them, day job and wife willing.